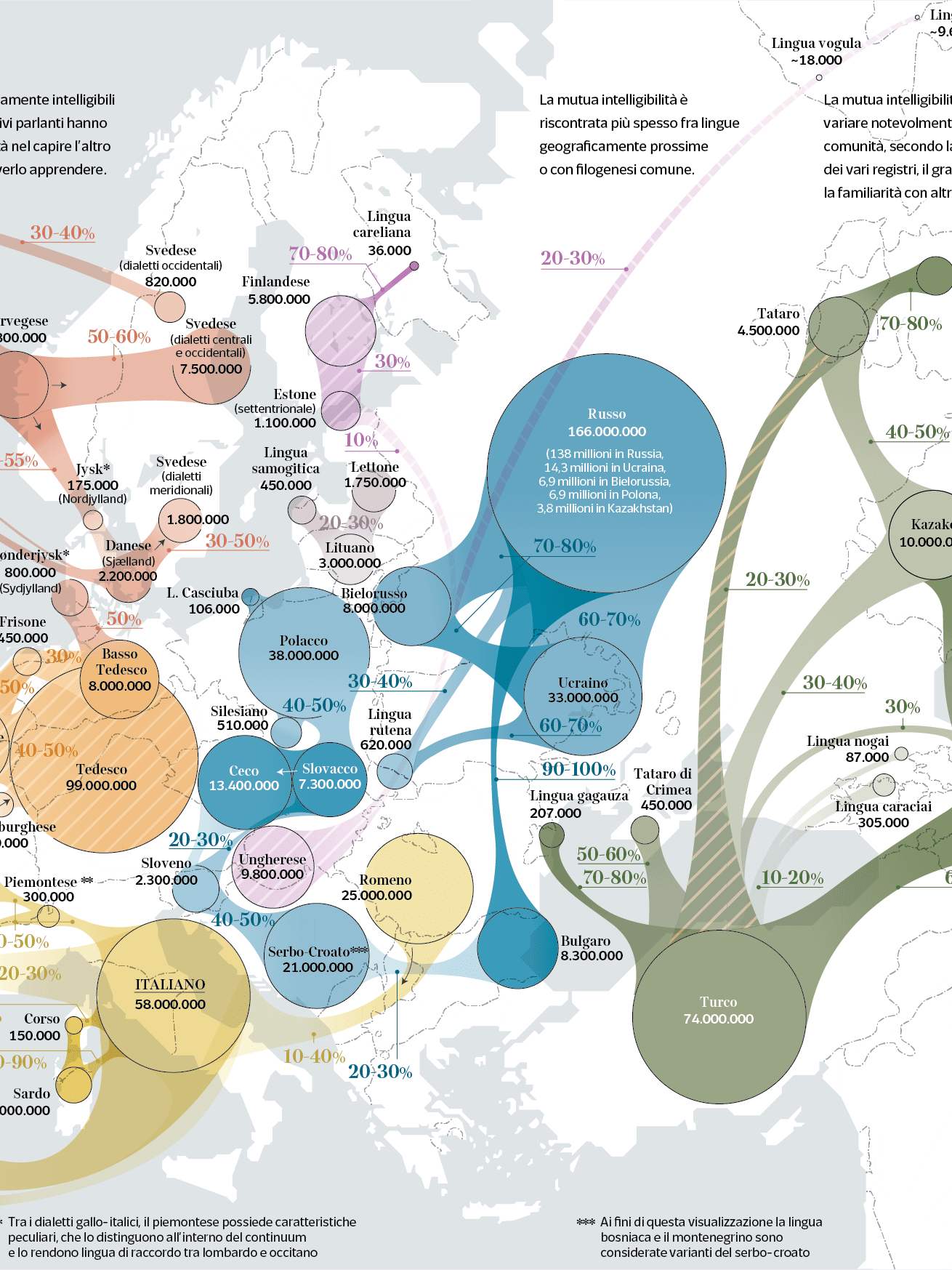

A new visualization on #Linguistics for @la_lettura n.604 on constructed languages through history: who composed them and why, how they are supposed to work, and what theoretical path lies behind that continuous and tireless work of brilliance.

Constructed languages (or conlangs) are artificial languages purposefully designed for a specific use case. There can be - and there have been, throughout the last centuries - many reasons to create a constructed language, such as ease of human communication, linguistic experimentation, and artistic creation.

A famous one is Esperanto, created by the Polish physician L.L. Zamenhof in the late 19th century as an auxiliary language for people of different ethnic backgrounds and in support of the rising movement for universal brotherhood. Many more are named and described in this collection: engineered languages, mystical languages, jargons, and even artistic languages (not only literary ones).

But the most fascinating part of this story is the logical and philosophical ferment, pursued as a hunt for the rules of a code, which began in the Middle Ages in Europe as a search for the perfect language, based on established principles and able to account for the whole of human knowledge.

As this idea was nurtured mostly within Western culture, it started out as a search for the language that the god of the Holy Bible gave to Adam, which also brought with it the knowledge of all things. That was a language that provided a name for everything (whether substance or accident) and according to which there should be a thing for every name.

The myth of the Platonic demiurge also emerged from classical Greek culture, but in the form of a father who orders and shapes the world, rather than a son who inherits it. Both myths were raised from the need to introduce a unitary principle capable of overcoming the rigid dualism between the world of ideas and sensible reality.

This goal belongs to language, of course. A pristine, primeval language, which should present itself as an instrument of domination, of conquest, and be therefore perfect.

On the one hand, this relentless work is also the story of many failures. And in fact, it is linked to another myth, that of the confusio linguarum following the collapse of the Tower of Babel. Ramon Llull's Ars Magna, founded on the study of Biblical Hebrew and Kabbalah, was the first failed attempt to recover Adam's language.

Since the seventeenth-century scholars who took an interest in conlangs (such as John Wilkins and Gottfried Wilhelm Leibniz) already admitted that Adam's language cannot be recovered, research focused on trying to create a similar one, in which knowledge is represented by means of a direct method, where the sign corresponds to the referent like an emblem.

But in such an a-priori language (that is, one created out of nothing) one has to find an element of the sign-content for each element of the sign-expression (what Louis Hjelmslev calls functives). And this comes from the fact that this language, not being based on double articulation as natural languages are (in which meaningless sounds combine to form meaningful words), requires instead that the slightest change in sound also cause a change in meaning.

Creating a language from the composition of a limited number of traits is the precondition for achieving the perfect isomorphism between expression and content, but it is impossible because substances are finite, while accidents are infinite.
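To make the idea concrete, here is a minimal sketch in Python of a toy a-priori scheme in which every letter of a word carries a piece of content. The three tables and the three-letter word shape are hypothetical, invented purely for illustration; they are not taken from Wilkins, Leibniz, or any historical project.

```python
from itertools import product

# Hypothetical toy taxonomy, invented for illustration only.
# Each position in a word encodes one level of the classification.
GENUS = {"z": "animal", "d": "plant"}
DIFFERENCE = {"i": "four-footed", "a": "winged", "o": "flowering"}
SPECIES = {"t": "domestic", "p": "wild"}


def decode(word: str) -> str:
    """Read a three-letter word as genus + difference + species."""
    if len(word) != 3:
        raise ValueError("toy words have exactly three letters")
    genus, difference, species = word
    return f"{SPECIES[species]} {DIFFERENCE[difference]} {GENUS[genus]}"


def all_words() -> list[str]:
    """Enumerate every word the toy scheme can form."""
    return ["".join(letters) for letters in product(GENUS, DIFFERENCE, SPECIES)]


if __name__ == "__main__":
    # Changing a single letter always changes the meaning.
    for word in ("zit", "zip", "zat"):
        print(word, "->", decode(word))
    # The inventory is closed: only as many things can be named
    # as there are combinations of the predefined traits.
    print(len(all_words()), "possible words")
```

The sketch shows both halves of the argument: no letter is meaningless, so there is no double articulation, and the whole vocabulary is exhausted by the combinations of a fixed set of traits, which is exactly where such a scheme runs short of an open-ended reality.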

On the other hand, every attempt to create an encryption, a path to access the information brought by an ideal language, also called its specific 'genius', turned out to be unsuccessful. The user who speaks it as a natural idiom should have already memorised all this information to master the language itself, but this is exactly what is also required of someone who speaks a natural language, for example using the word dog, or chien, or Hund, or perro, as they should refer to a notion stored in their mind and/or a reference in the external world. So what would be the benefit of switching to the perfect language? Why would it be perfect?

From the late 17th century onwards, some trends already internal to the doctrine strengthened and took separate routes.

I. Some scholars, inspired by the studies of Leibniz, developed operating rules that could be applied to a given vocabulary, whether it was invented from scratch or inspired by those of existing languages.

The scientific method applied to the study of the many existing languages began to spread, and the existence of a universal grammar was postulated. This grammar wouldn't be evident but should be discovered below the surface of human languages, and was supposed to govern every natural language. This vision actually laid the foundations for the generative grammars that began to be researched and published in the second half of the 20th century, even if they are traced back - as far as remote inspiration goes - to the Cartesianism of Port-Royal (1660).

II. In an invented language, words can be chosen to name things according to their nature (for example by forging new onomatopoeias), or according to human convention. Many conlangs from the last three centuries were aimed at matching signs and reality or signs and corresponding concepts. And this also happened for the rules of use, or pragmatics, which is what a speaker implies and a listener infers based on contributing factors like the situational context. These rules should conform to the multiplicity of meanings, thus some constructed languages tried to exclude this variety of pragmatic aspects, while others strove to incorporate them.

III. The growing interest in existing languages also instilled the doubt that the myth of the Tower of Babel should no longer be seen as a sentence to serve, but as the source of the millennial history of different peoples and cultures. It is no coincidence that a painter like Bruegel had already taken possession of it, as he was always interested in representing the destiny of mankind alongside its work (and work tools, pulleys, plumb lines, compasses, winches, and so on, whose names noticeably vary from language to language, indeed).

Pieter Bruegel the Elder - c. 1563 - cc Wikipedia

The celebration of the genius of languages - and of the profound unity between a nation and its own language - shapes the subsequent European cultural history and channels it towards a new awareness: that the natural differentiation and separation of languages is the positive phenomenon that has allowed the birth of settlements, of cities, of the feeling of community. The accompanying reluctance towards polyglotism and multiculturalism is a strong impulse to retreat into one's own cultural roots, and still survives today in every part of the world in the form of 'cultural' opposition against the mixture of different peoples, bearers of different legacies.

Alongside the proliferation of constructed auxiliary languages, which made their way into society from the 19th century onwards, a remarkably large number of conlangs have been devised for literature and, more recently, the film industry.

Many of these conlangs and many constructed auxiliary languages are a-posteriori: they propose grammar rules and vocabulary inspired by existing languages.

Although the purpose of these a-posteriori literary languages is not exactly to serve as a vehicle for cultural exchange, they lack a valid philosophical approach, so to speak. They do not solve the problem of linguistic relativism, which is that different languages arrange meaning in different and mutually incommensurable ways. It is taken for granted that expressions that are somehow synonymous from language to language exist, and that there is always a possibility of 'direct' translation between terms and propositions of two different languages. They are almost always 'west-centered', because their model of organizing meaning lies in the Western logical tradition.

It is also true that there are many historical reasons for the current hegemony of English, and that even a vehicular language based on Indo-European languages (such as Esperanto) or precisely on English (which is rich in monosyllables, has a typology in many respects isolating, and is open to many loanwords from other languages) could work as an international language for the same reasons for which, in past centuries, this function was performed by natural-historical languages such as Greek, Latin, French or Swahili.

But history teaches us that a civilization with an international language does not suffer from the multiplicity of languages. It is worth recalling the fate of Greek (with the koine, within the Hellenistic kingdoms and the Eastern Roman Empire) and of Latin (in Western Christian culture).

A final reflection on contemporaneity. The languages that we speak today with computers are codes that do not reach the dignity of language because they have just one syntax - simple but strict. They are parasitic languages of other languages as to the meanings that are assigned to their otherwise empty symbols.

However, they are universal codes, equally understandable to speakers of different languages, and perfect, as they contain no errors or ambiguities.

They are also a-priori conlangs because they are based on grammatical rules that are different from the superficial rules of historical-natural languages. They convey a presumed grammar common to all languages. And they assume that this grammar is deep because it aligns with the logical laws of men and also with those of machines. But they are limited because, according to many scholars, they are anchored to the Western logical tradition built on the structure of the Indo-European languages, and they do not allow one to say everything that can be said in a natural-historical language.

The dream of a perfect language in which all the meanings of the terms of a historical-natural language can be defined and which allows meaningful exchanges between man and machine returns in the research on artificial intelligence. Generative software, e.g. ChatGPT, receives inference rules on the basis of which it can weigh up the coherence of a story that has been given and in turn 'extract' some consequences. In other words, the ability to use logical connectors of the type if...then has been transferred to it (as it already was a few decades ago, since this is how BASIC or Pascal syntax worked).

On the surface, it looks like ChatGPT is able to crochet a story from very few prompts. These prompts are the elementary, or 'primitive', components of scripts that supply the 'thinking' machine with patterns of action and situations, and the rest is statistically generated.

This rings a bell. All this would not have been possible without the age-old search for a perfect, philosophical, a-priori language. What becomes of the search around semantics is left aside here, but that's another story.

In 1854 George Boole said that the aim of his research was to investigate the fundamental laws of those mental operations by means of which reasoning is carried out; Boole pointed out that we humans could not easily understand how the innumerable languages of the Earth have been able to preserve so many common characteristics over the centuries if they were not all rooted in the very laws of the mind. When Noam Chomsky talks about ChatGPT today in terms of 'plagiarism', he is seemingly avoiding addressing an old question that he himself helped to renew.